Creating a good prompt for analysis

Writing prompts that work consistently is a skill that can be learned. In this chapter we look at several use cases and best practices for constructing prompts that extract insights, assign a score, apply a custom evaluation methodology, or simply summarize documents and open-ended survey responses. Good prompts make it possible to build a continuous, automated workflow in which analysis runs as soon as data arrives, saving a great deal of time. They also turn unstructured data into structured results that are both human-readable and quantifiable.

A good prompt generally has the following four characteristics:

- Constraints - What you don't want the AI model to do

- Emphasis - What the AI model should pay particular attention to

- Task - A specific request describing exactly what the AI model is supposed to do

- Context - Clear, specific examples of how the AI model should respond
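The four characteristics can be kept as separate fields and joined into one prompt string, which makes it easy to reuse the same constraints across many prompts. The following is a minimal Python sketch under stated assumptions: `PromptSpec` and `render` are hypothetical names, not part of Intelligent Cell, and placing emphasis on its own lines is an illustrative choice (the prompts in this chapter mostly add emphasis by capitalizing key words instead).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the four parts of a prompt."""

    task: str  # Task: the specific ask
    constraints: list[str] = field(default_factory=list)  # what the model must not do
    emphasis: list[str] = field(default_factory=list)  # what to pay particular attention to
    examples: list[str] = field(default_factory=list)  # Context: sample responses

    def render(self) -> str:
        """Join the parts into one prompt: constraints, emphasis, task, then examples."""
        parts = [*self.constraints, *self.emphasis, self.task]
        if self.examples:
            # The wording of this heading is an illustrative assumption.
            parts.append("Examples of how to respond:")
            parts.extend(f"- {example}" for example in self.examples)
        # Separate parts with blank lines, like the prompts in this chapter.
        return "\n\n".join(parts)
```

Keeping the parts separate also makes it obvious when one is missing, such as a prompt with an empty `examples` list and therefore no context.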

Use case 1. Summary

Imagine you want to assign a score based on specific content in a PDF attachment or in open-ended responses. This often comes up when you have your own custom calculation methodology. For example, you may be a fund that invests in companies focused predominantly on green practices. When companies apply for funding, you need a way to filter out those that are not green-focused.

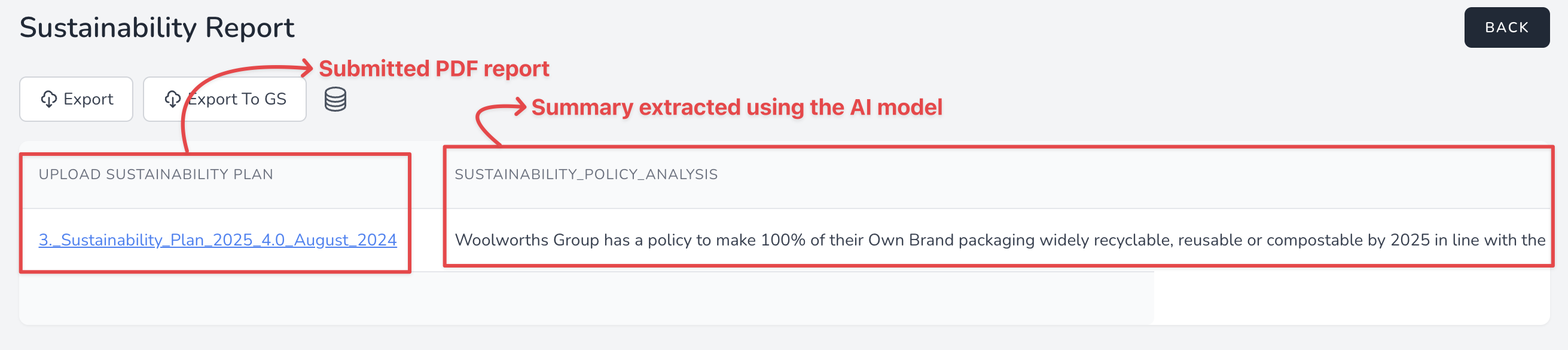

Consider the following prompt for analyzing a sustainability report PDF submitted by an organization:

You should BASE YOUR ANSWERS on the CONTENTS of the PDF only.

Summarize any policy on recyclable packing material.

If nothing is mentioned about recyclable packing material, say "There is no such policy".

If you are not able to identify, say "Not able to identify".

This prompt is good, but not yet great:

- Constraints - The prompt explicitly states that the analysis should be based on the attached PDF only, not on the AI model's own knowledge.

- Task - The task is specific and clear: summarize any policy on recyclable packing material.

- Emphasis - The capitalized words make clear what the model should focus on.

- Context - This is where the prompt falls short: it gives no example of the expected response.
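Because the prompt fixes two exact replies for the cases where nothing is found, a downstream workflow can act on them automatically, for example to set aside applicants that report no recyclable-packing policy. A minimal Python sketch, assuming the model's reply is already available as a plain string (how it is retrieved is not covered here); `classify_summary` and its status values are hypothetical:

```python
NO_POLICY = "There is no such policy"
NOT_IDENTIFIED = "Not able to identify"


def _normalize(text: str) -> str:
    """Trim whitespace plus surrounding quotes and periods, then lowercase."""
    return text.strip().strip("\"'. ").lower()


def classify_summary(reply: str) -> str:
    """Map a reply to the summary prompt onto a status for filtering.

    Returns "no_policy" or "unknown" when the reply is one of the two
    fixed phrases the prompt asks for; any other reply is treated as a
    summary of an existing policy and returns "has_policy".
    """
    cleaned = _normalize(reply)
    if cleaned == _normalize(NO_POLICY):
        return "no_policy"
    if cleaned == _normalize(NOT_IDENTIFIED):
        return "unknown"
    return "has_policy"
```

The matching is deliberately strict: a reply that paraphrases one of the fixed phrases is classified as a summary, so "has_policy" results may still be worth a quick manual check.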

Use case 2. Labelling and Categorization

Intelligent Cell can be used to extract themes, labels, or categories from text content.

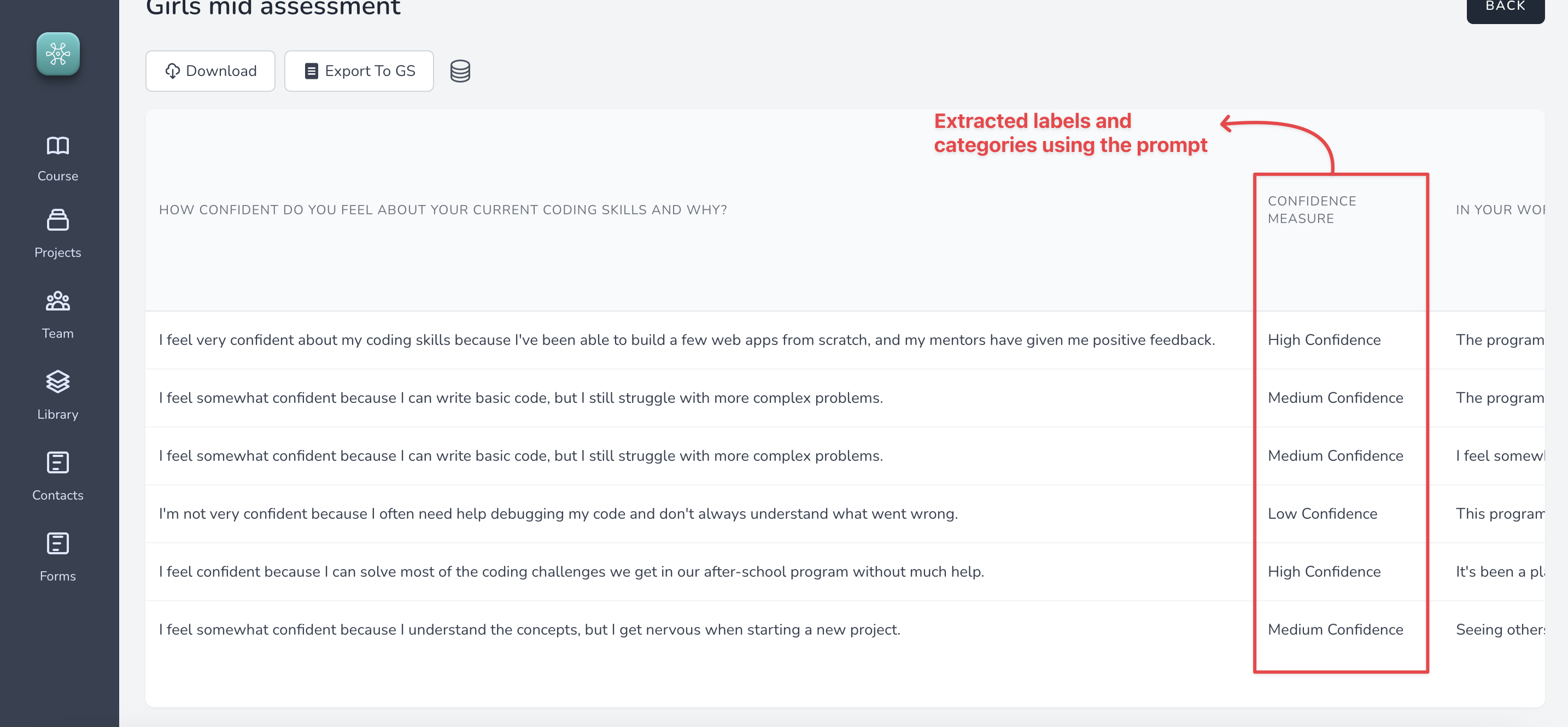

In the example below, we extract a confidence level from open-ended responses to the following question:

How confident do you feel about your current coding skills and why?

The prompt used is:

You should base your answers on the contents of the response only.

Evaluate the response based on the following conditions:

If the response shows high confidence, say "High Confidence"

If the response shows medium confidence, say "Medium Confidence"

If the response shows low confidence, say "Low Confidence"

If you don't know, say "Don't know"
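Since each response comes back as one of four fixed labels, the labels can be counted across all responses to turn open-ended text into a simple distribution. A minimal Python sketch, assuming the model's replies have been collected into a list of strings; `normalize_label`, `tally`, and the "Unrecognized" bucket are hypothetical names for illustration:

```python
from __future__ import annotations

from collections import Counter

# The four labels the confidence prompt asks the model to use.
LABELS = ("High Confidence", "Medium Confidence", "Low Confidence", "Don't know")


def _key(text: str) -> str:
    """Comparison key: trimmed, lowercased, with apostrophes removed."""
    return text.strip().strip("\"'. ").lower().replace("'", "").replace("\u2019", "")


def normalize_label(reply: str) -> str:
    """Return the matching label, or "Unrecognized" if the reply is off-script."""
    for label in LABELS:
        if _key(reply) == _key(label):
            return label
    return "Unrecognized"


def tally(replies: list[str]) -> Counter[str]:
    """Count how many responses fall under each label."""
    return Counter(normalize_label(reply) for reply in replies)
```

Counting "Unrecognized" replies separately makes it easy to spot responses where the model did not follow the instructions.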

The result:

Use case 3. Scoring

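Developing a prompt for scoring is very similar to developing one for labelling. The prompt below checks a strategy document against three criteria and returns a single label: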

Evaluate the response based on the criteria below.

You should only use the response to evaluate.

These are the criteria:

1. The strategy document supports the right to self-determination.

2. The strategy document acknowledges First Nations people.

3. The strategy document has an active policy to partner with First Nations communities.

If all three conditions are satisfied, say "All conditions satisfied"

If even one condition is not satisfied, say "Doesn't meet expectations"

If you don't know, say "Don't know"
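The prompt asks the model to combine the three criteria itself. An alternative, sketched here as an assumption rather than as how Intelligent Cell works, is to ask about each criterion separately, map each reply to `True`, `False`, or `None` (unknown), and combine the results in code so the rule is applied the same way every time. `overall_label` and `score` are hypothetical names; `score` shows how the same results could also yield a number:

```python
from __future__ import annotations

# The three criteria from the scoring prompt, one question per criterion.
CRITERIA = (
    "The strategy document supports the right to self-determination.",
    "The strategy document acknowledges First Nations people.",
    "The strategy document has an active policy to partner with First Nations communities.",
)


def overall_label(results: list[bool | None]) -> str:
    """Combine per-criterion results: True (met), False (not met), None (unknown)."""
    if len(results) != len(CRITERIA):
        raise ValueError(f"expected {len(CRITERIA)} results, got {len(results)}")
    if any(result is False for result in results):
        # Even one unmet condition is enough to fail the document.
        return "Doesn't meet expectations"
    if all(result is True for result in results):
        return "All conditions satisfied"
    # Nothing failed, but at least one criterion could not be determined.
    return "Don't know"


def score(results: list[bool | None]) -> int:
    """Numeric alternative: how many criteria are met (0 to len(CRITERIA))."""
    return sum(1 for result in results if result is True)
```

When one criterion fails and another is unknown, this sketch returns "Doesn't meet expectations", since a single unmet condition already decides the outcome.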

Use case 4. Evaluation criteria

Implementing custom evaluation criteria is straightforward. An example prompt is provided below:

Evaluate the response based on the criteria below.

You should only use the response to evaluate.

If the STRATEGY DOCUMENT supports the right to self-determination, then say "Self-determination exists"

If there is no mention of self-determination, then say "Doesn't exist"

If you don't know, say "Don't know"
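Because single-criterion prompts like this one follow the same pattern, they can be generated from a template, so adding a new criterion means supplying a few phrases rather than rewriting the prompt. A minimal Python sketch; `evaluation_prompt` and its parameters are hypothetical, and the wording mirrors the example prompt above:

```python
def evaluation_prompt(subject: str, condition: str, topic: str,
                      present_label: str, absent_label: str,
                      unknown_label: str = "Don't know") -> str:
    """Build a single-criterion evaluation prompt.

    subject: what is being evaluated; it is capitalized for emphasis.
    condition: what the subject must do for the criterion to be met.
    topic: what counts as "no mention" when the criterion is absent.
    """
    lines = [
        "Evaluate the response based on the criteria below.",
        "You should only use the response to evaluate.",
        f'If the {subject.upper()} {condition}, then say "{present_label}"',
        f'If there is no mention of {topic}, then say "{absent_label}"',
        f"If you don't know, say \"{unknown_label}\"",
    ]
    # Blank lines between instructions, as in the prompts in this chapter.
    return "\n\n".join(lines)


# Usage: rebuild the self-determination prompt from its parts.
prompt = evaluation_prompt(
    subject="strategy document",
    condition="supports the right to self-determination",
    topic="self-determination",
    present_label="Self-determination exists",
    absent_label="Doesn't exist",
)
print(prompt)
```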